Models for Fine-tuning and Serving - Predibase

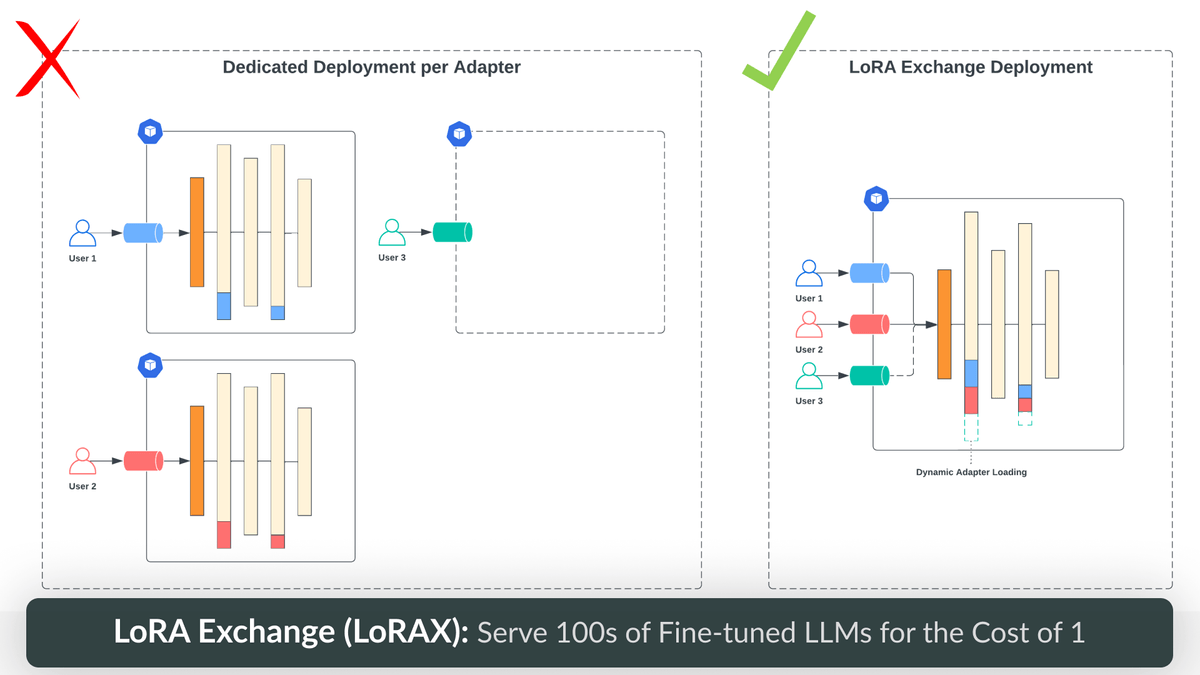

Predibase offers the largest selection of open-source LLMs for fine-tuning and inference, including Llama-3, CodeLlama, Mistral, Mixtral, Zephyr and more. Take advantage of our cost-effective serverless endpoints or deploy dedicated endpoints in your VPC.