AI Benchmarking Dashboard | Epoch AI

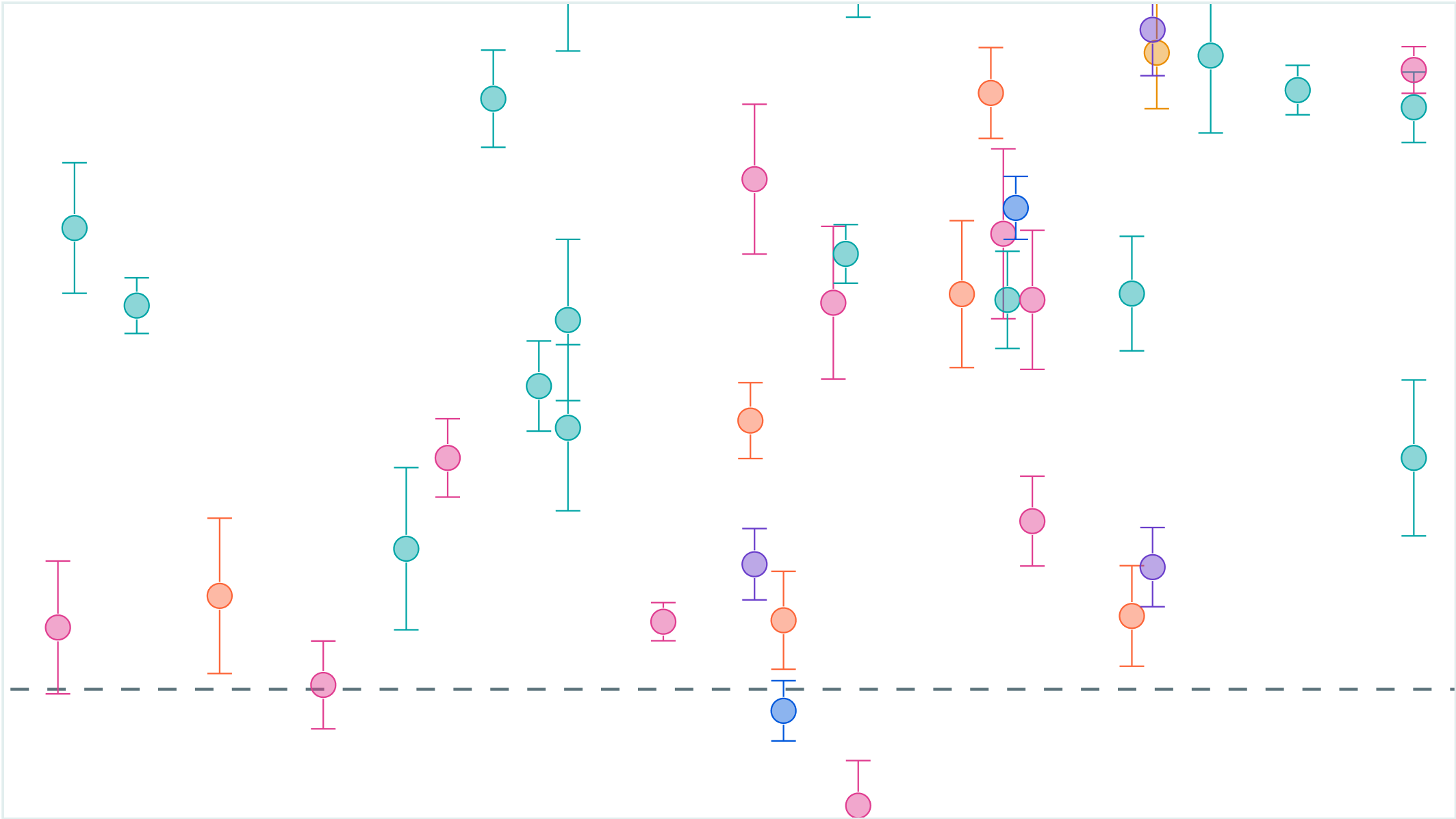

Our database of benchmark results features the performance of leading AI models on challenging tasks. It includes results from benchmarks evaluated internally by Epoch AI as well as data collected from external sources. The dashboard tracks AI progress over time and correlates benchmark scores with key factors such as compute or model accessibility.