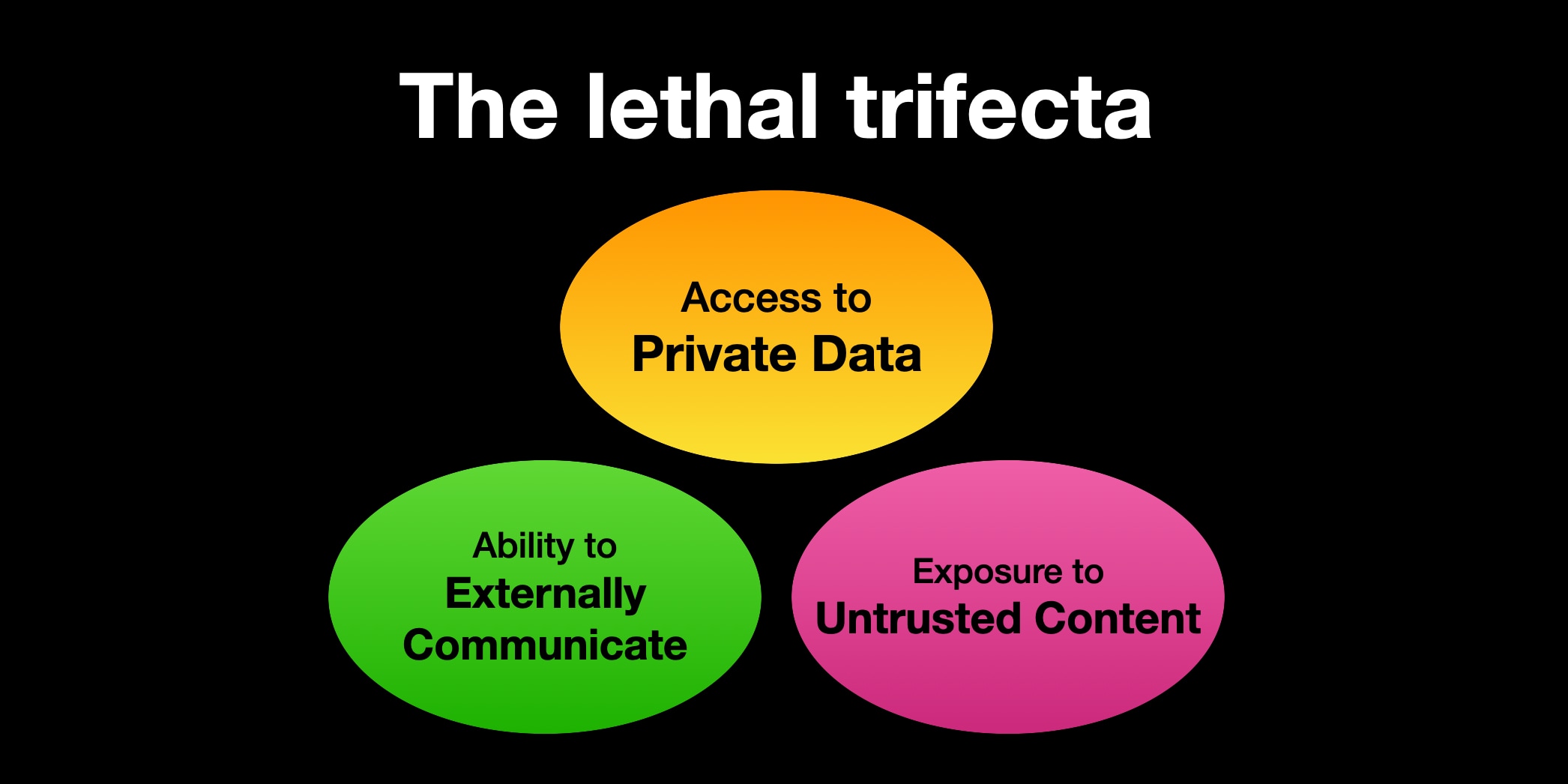

The lethal trifecta for AI agents: private data, untrusted content, and external communication

If you are a user of LLM systems that use tools (you can call them “AI agents” if you like), it is critically important that you understand the risk of … | Simon Willison’s Weblog