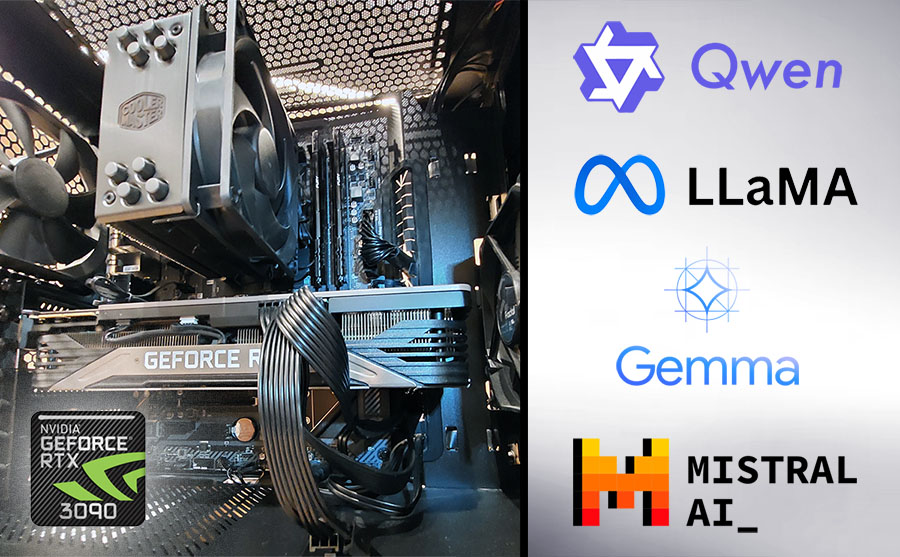

RTX 3090 and Local LLMs: What Fits in 24GB VRAM, from Model Size to Context Limits

Learn exactly which quantized LLMs you can run locally on an RTX 3090 with 24GB VRAM. This guide covers model sizes, context length limits, and optimal quantization settings for efficient inference. | Hardware Corner