Finding gliders in the game of life — Alignment Research Center

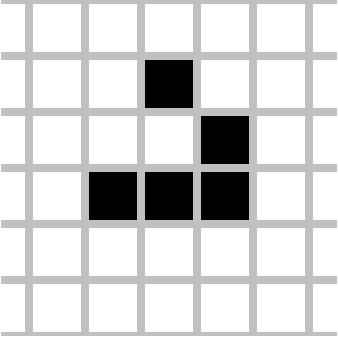

ARC’s current approach to ELK is to point to latent structure within a model by searching for the “reason” for particular correlations in the model’s output. In this post we’ll walk through a very simple example of using this approach to identify gliders in the game of life.