Large Language Models (LLMs) have achieved excellent performance in various tasks. However, fine-tuning an LLM requires extensive supervision. Humans, on the other hand, may improve their reasoning abilities by self-thinking without external inputs. In this work, we demonstrate that an LLM is also capable of self-improving with only unlabeled datasets. We use a pre-trained LLM to generate "high-confidence" rationale-augmented answers for unlabeled questions using Chain-of-Thought prompting an... | arXiv.org

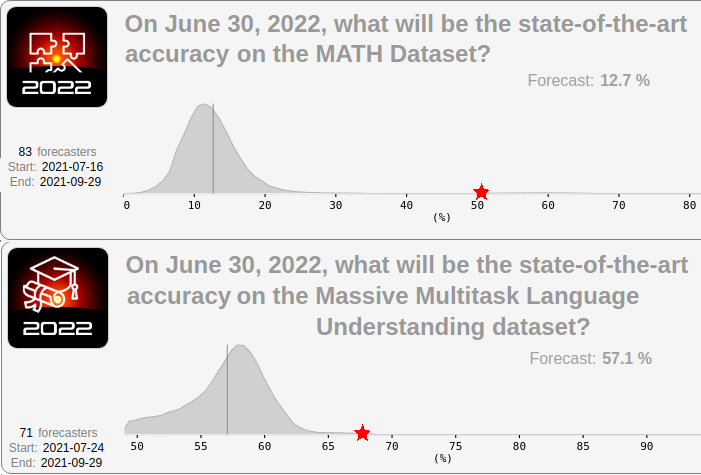

Thanks to Collin Burns, Ruiqi Zhong, Cassidy Laidlaw, Jean-Stanislas Denain, and Erik Jones, who generated most of the considerations discussed in this post. Previously [https://bounded-regret.ghost.io/ai-forecasting-one-year-in/], I evaluated the accuracy of forecasts about performance on the MATH and MMLU (Massive Multitask) datasets. I argued that most people, | Bounded Regret

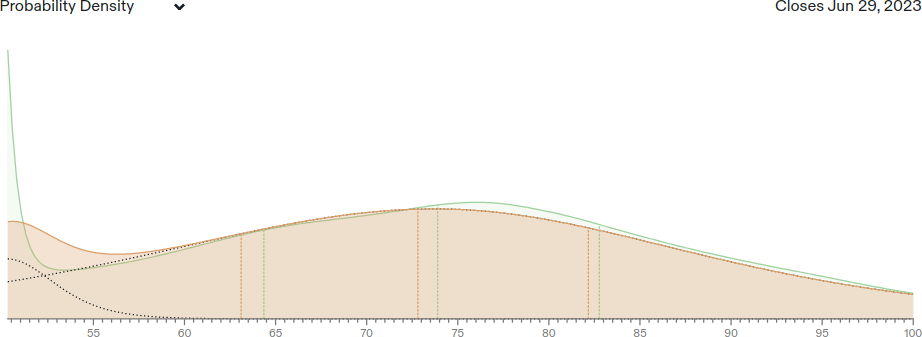

Last August, my research group created a forecasting contest [https://bounded-regret.ghost.io/ai-forecasting/] to predict AI progress on four benchmarks. Forecasters were asked to predict state-of-the-art performance (SOTA) on each benchmark for June 30th 2022, 2023, 2024, and 2025. It’s now past June 30th, so we can evaluate | Bounded Regret