Introducing the first purely serverless solution for fine-tuned LLMs - Predibase

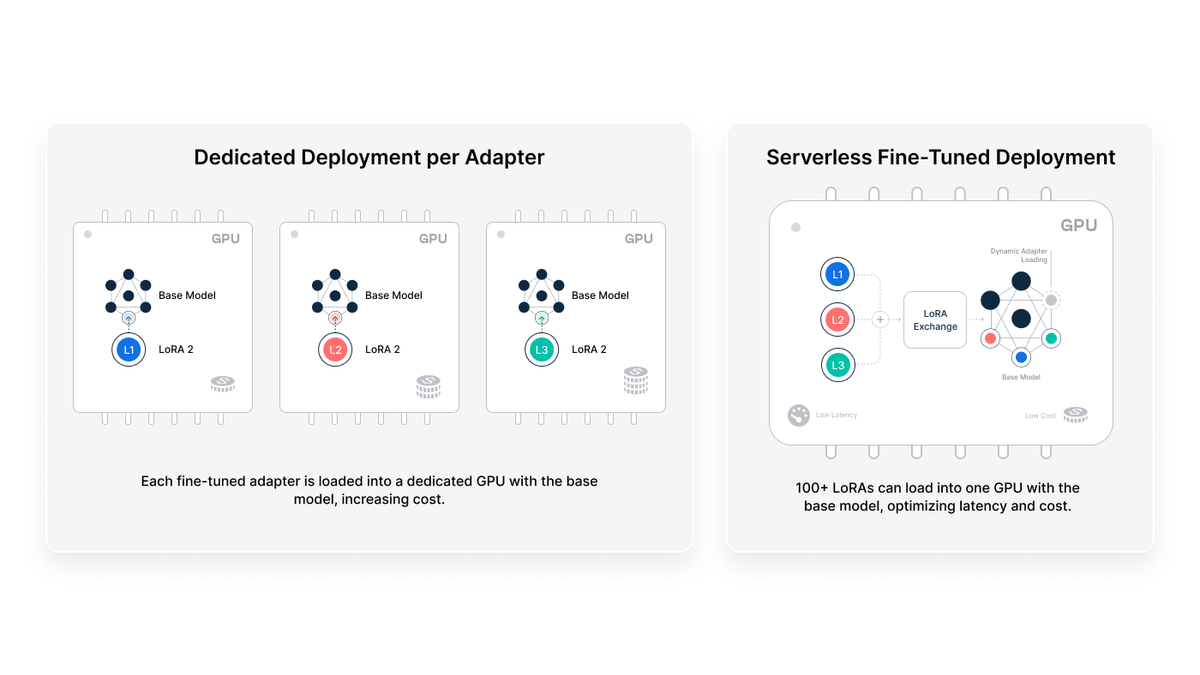

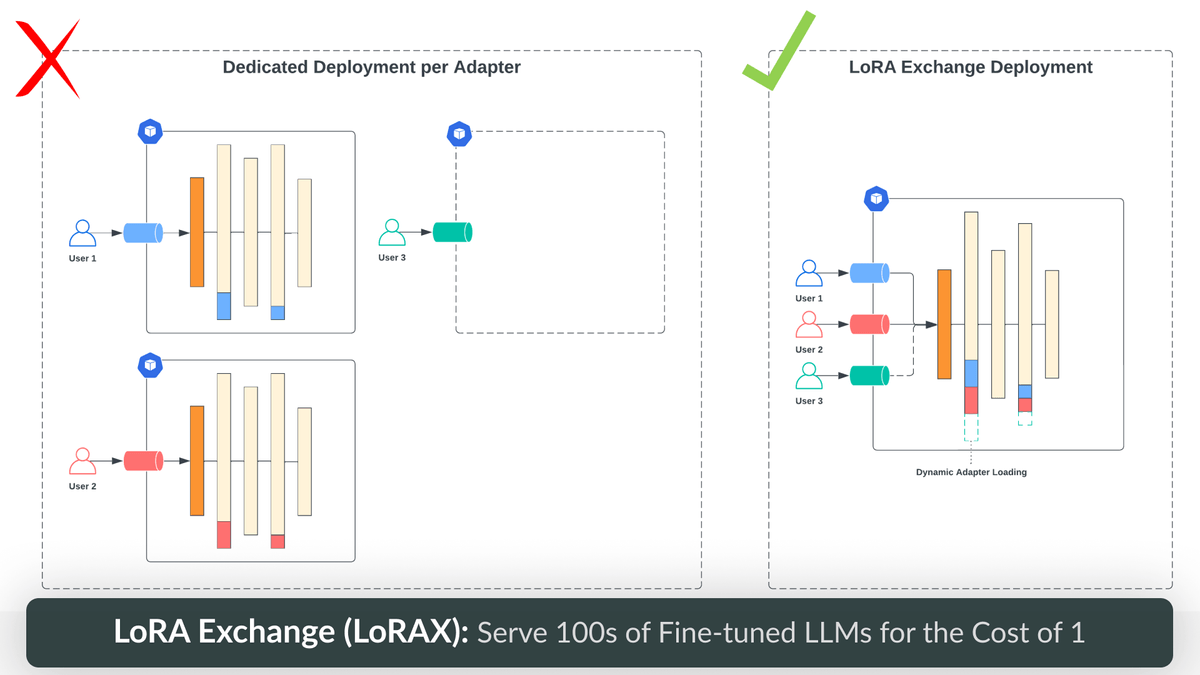

Serverless Fine-tuned Endpoints allow users to query their fine-tuned LLMs without spinning up a dedicated GPU deployment. Only pay for what you use, not for idle GPUs. Try it today with Predibase’s free trial!