Setting up Spark locally is not easy! Especially if you are simultaneously trying to learn Spark. If you > Don't know how to start working with Spark locally > Don't know what the recommended tools are to work with Spark (like which IDE or data storage table formats) > Try and try, and then give up, only to end up trying to use one of the cloud providers or give up altogether. This post is for you! You can have a fully functioning local Spark development environment with all the bells and whi...| www.startdataengineering.com

Working on a large codebase without any tests can be nerve-wracking. One wrong line of code or an in-conspicuous library update can bring down your whole production pipeline! Data pipelines start simple, so engineers skip tests, but the complexity increases rapidly after a while, and the lack of tests can grind down your feature delivery speed. It can be especially tricky to start testing if you are working on a large legacy codebase with few to no tests. In long-running data pipelines, bad c...| www.startdataengineering.com

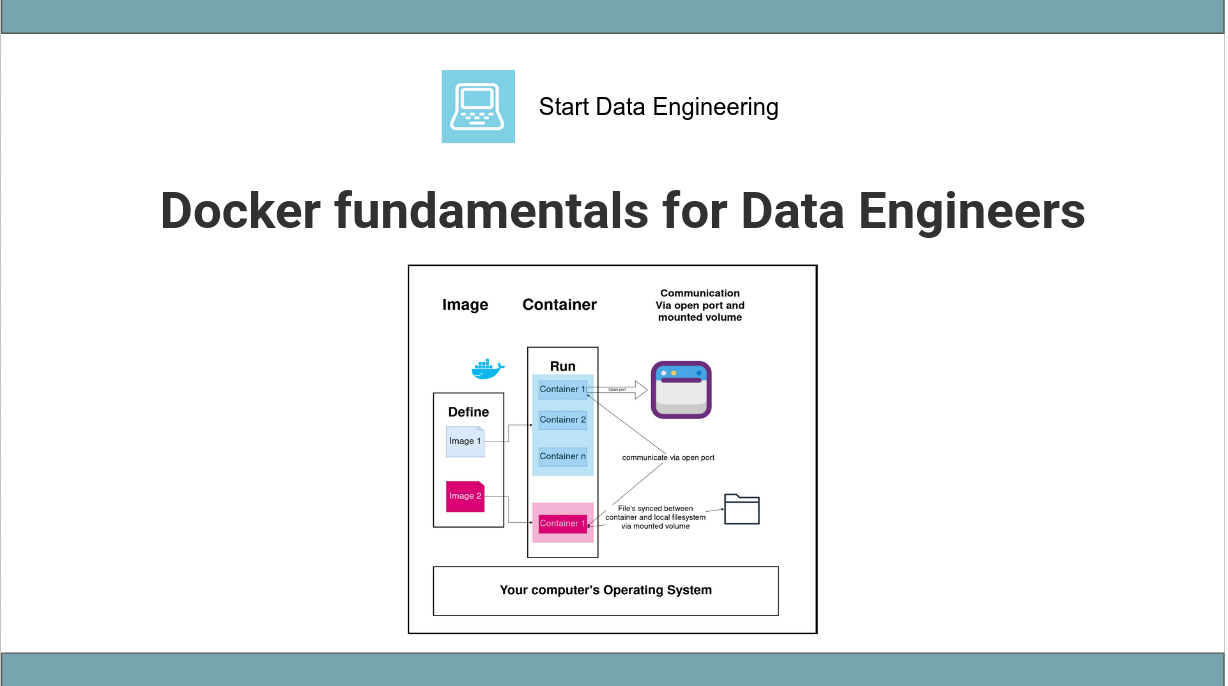

Docker can be overwhelming to start with. Most data projects use Docker to set up the data infrastructure locally (and often in production as well). Setting up data tools locally without Docker is (usually)a nightmare! The official Docker documentation, while extremely instructive, does not provide a simple guide covering the basics for setting up data infrastructure. With a good understanding of data components and their interactions combined with some networking knowledge, you can easily se...| www.startdataengineering.com