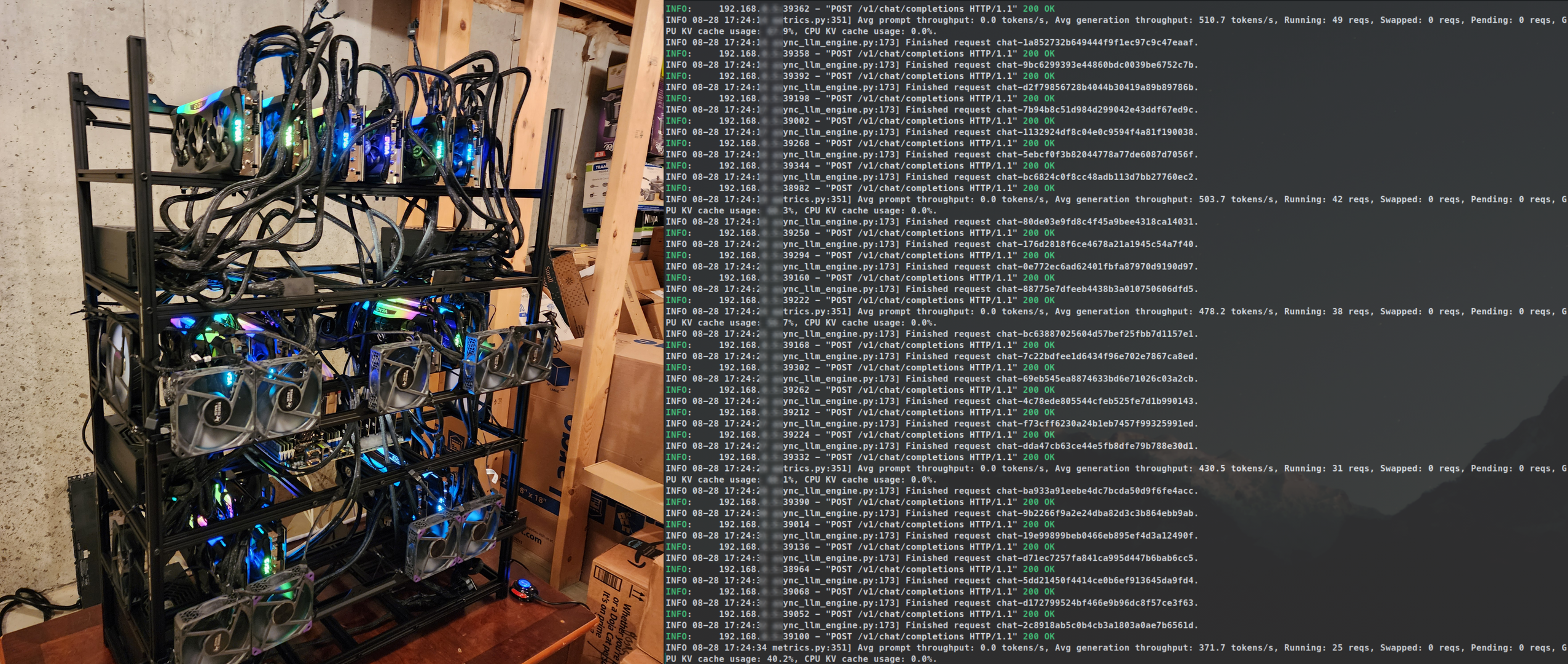

Stop Wasting Your Multi-GPU Setup With llama.cpp: Use vLLM or ExLlamaV2 for Tensor Parallelism · Osman's Odyssey: Byte & Build

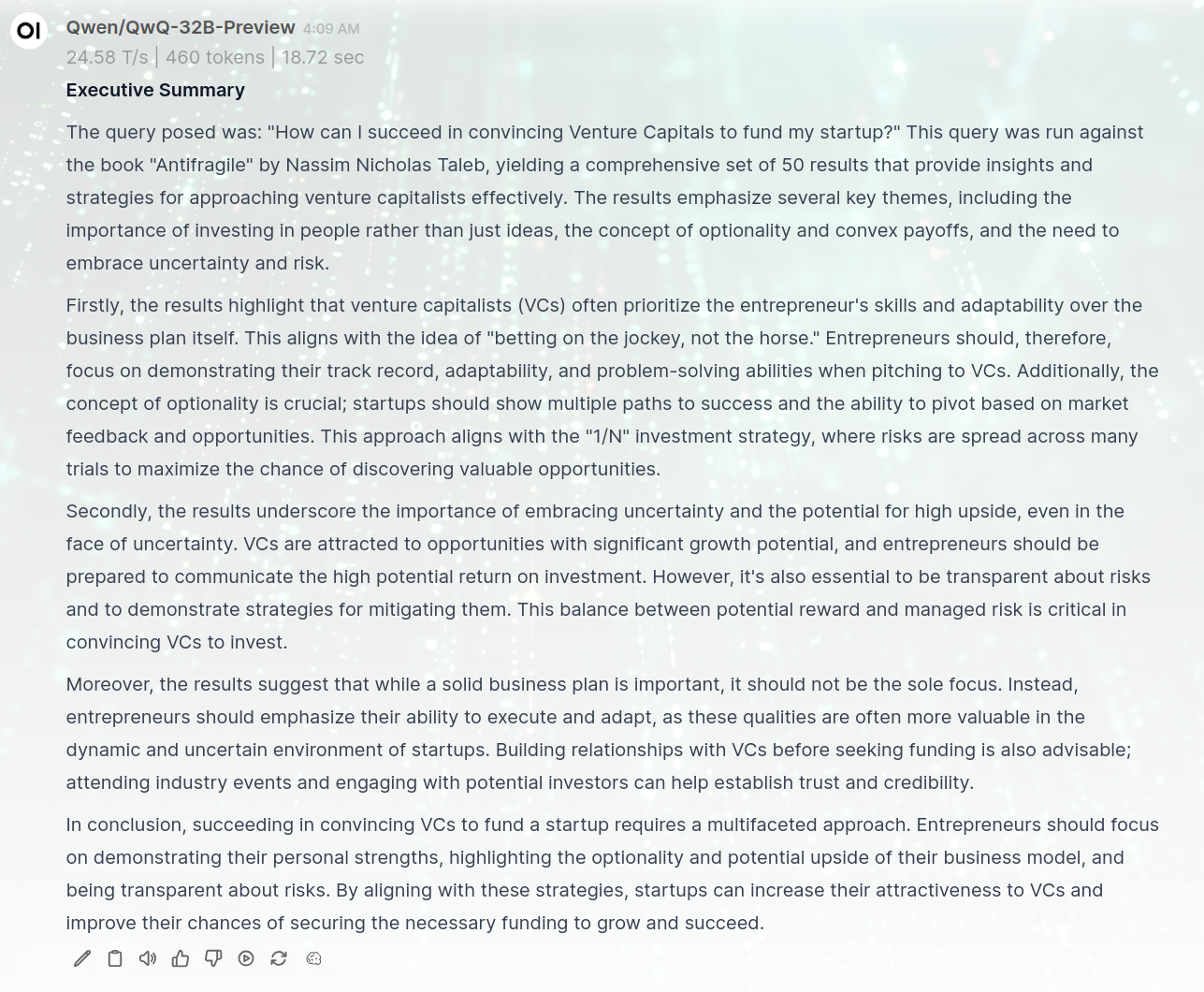

Exploring the intricacies of inference engines and why llama.cpp should be avoided in multi-GPU setups. Learn about Tensor Parallelism, the role of vLLM in batch inference, and why ExLlamaV2 has been a game-changer for GPU-optimized AI serving since it introduced Tensor Parallelism.