Data Movement Bottlenecks to Large-Scale Model Training: Scaling Past 1e28 FLOP | Epoch AI

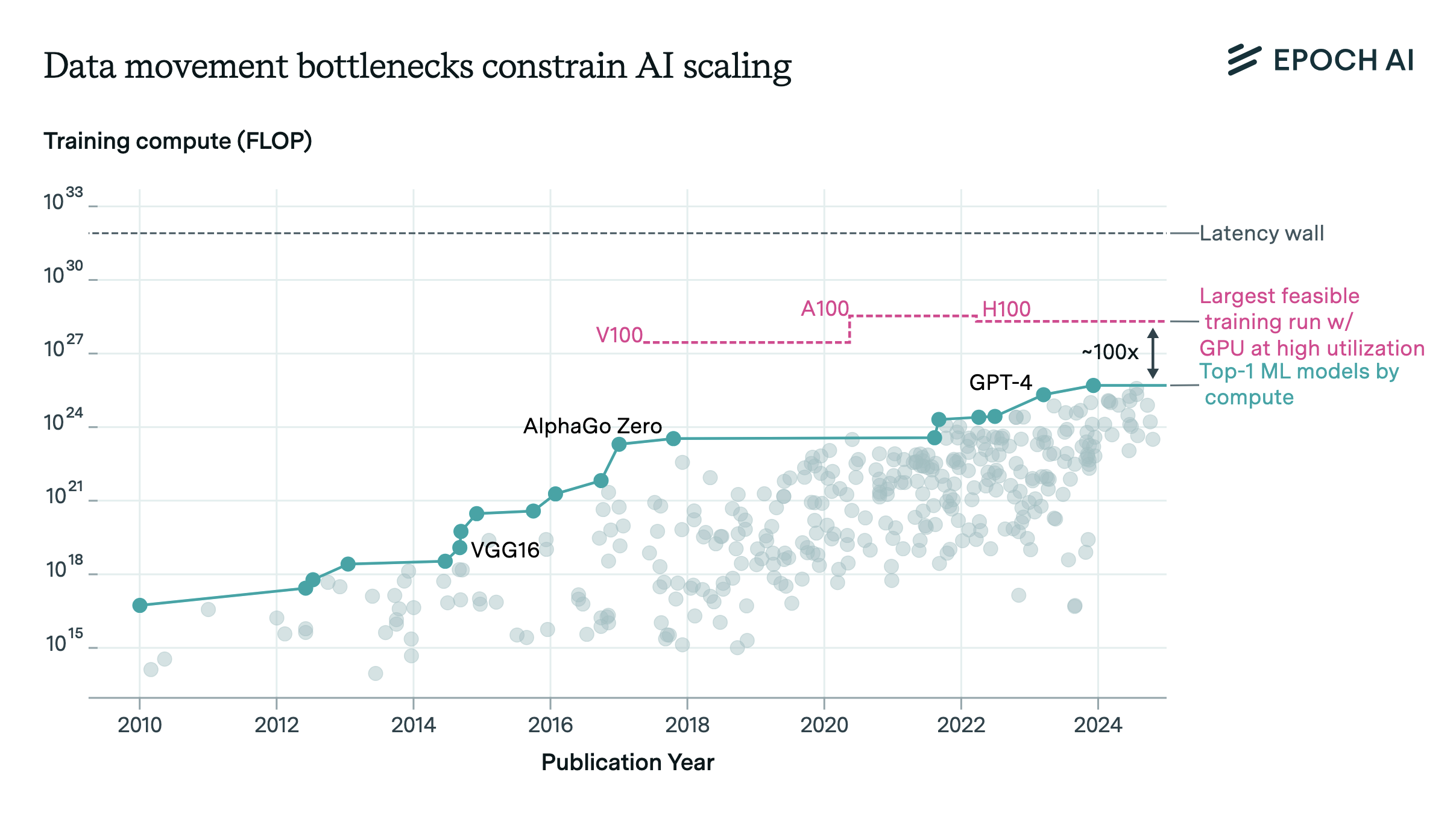

Data movement bottlenecks limit LLM scaling beyond 2e28 FLOP, with a “latency wall” at 2e31 FLOP. We may hit these in ~3 years. Aggressive batch size scaling could potentially overcome these limits.