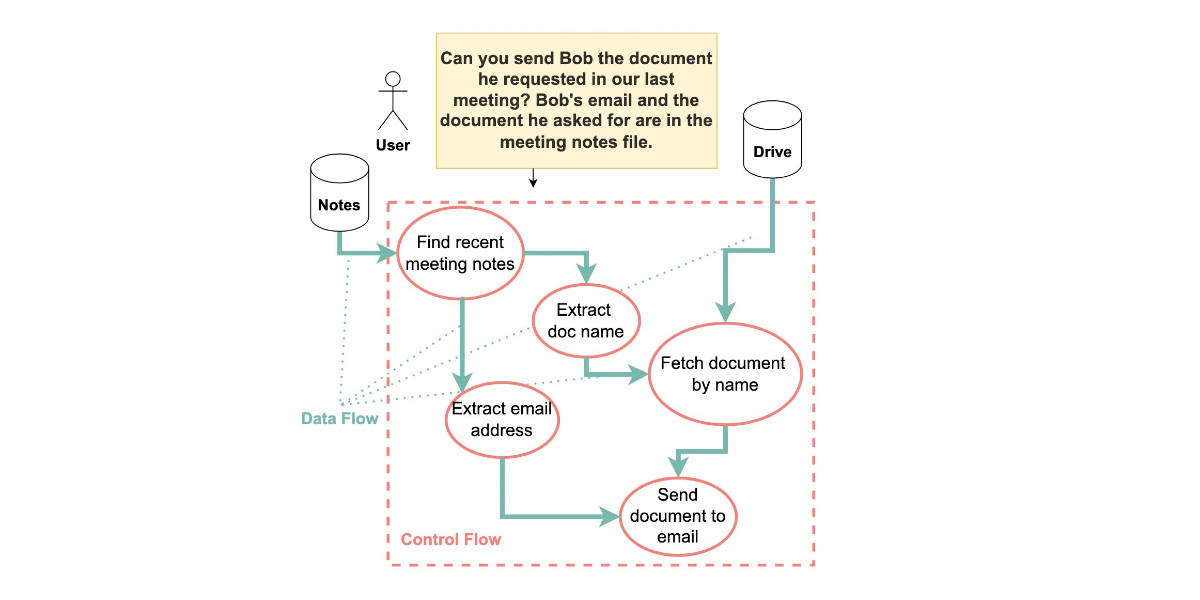

CaMeL offers a promising new direction for mitigating prompt injection attacks

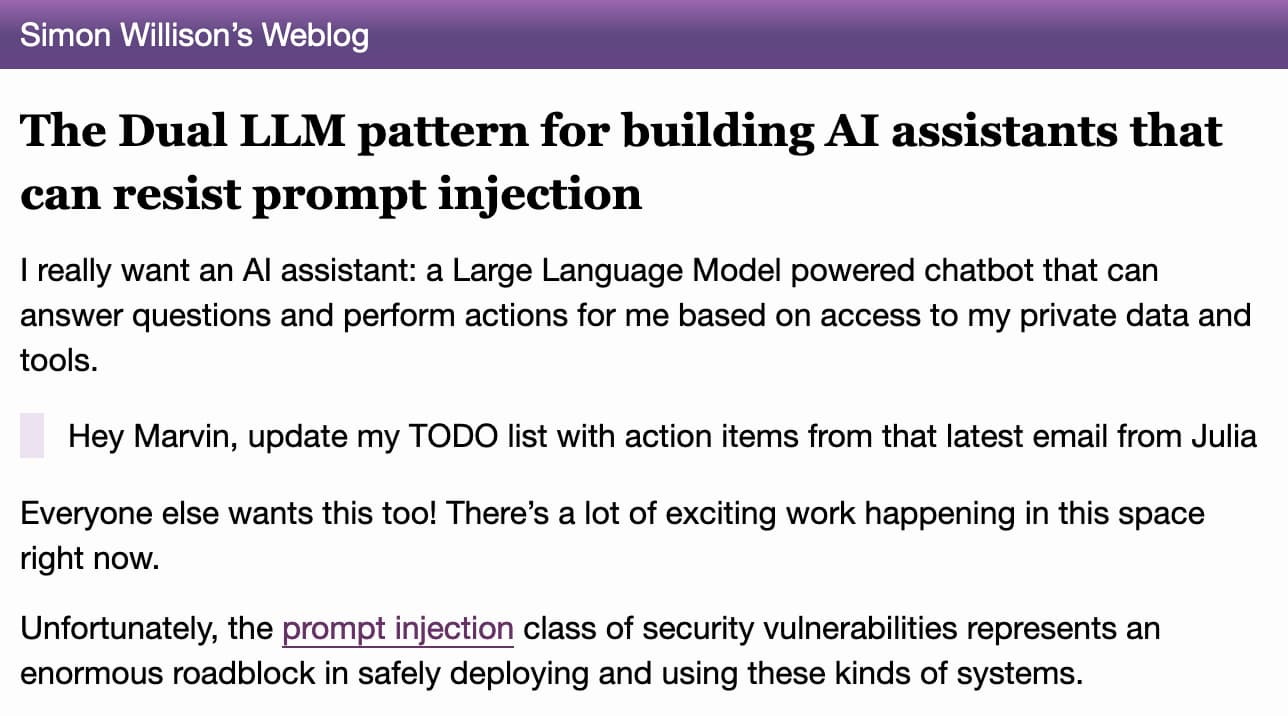

In the two and a half years that we’ve been talking about prompt injection attacks, I’ve seen alarmingly little progress towards a robust solution. The new paper Defeating Prompt Injections … | Simon Willison’s Weblog