Supabase MCP can leak your entire SQL database

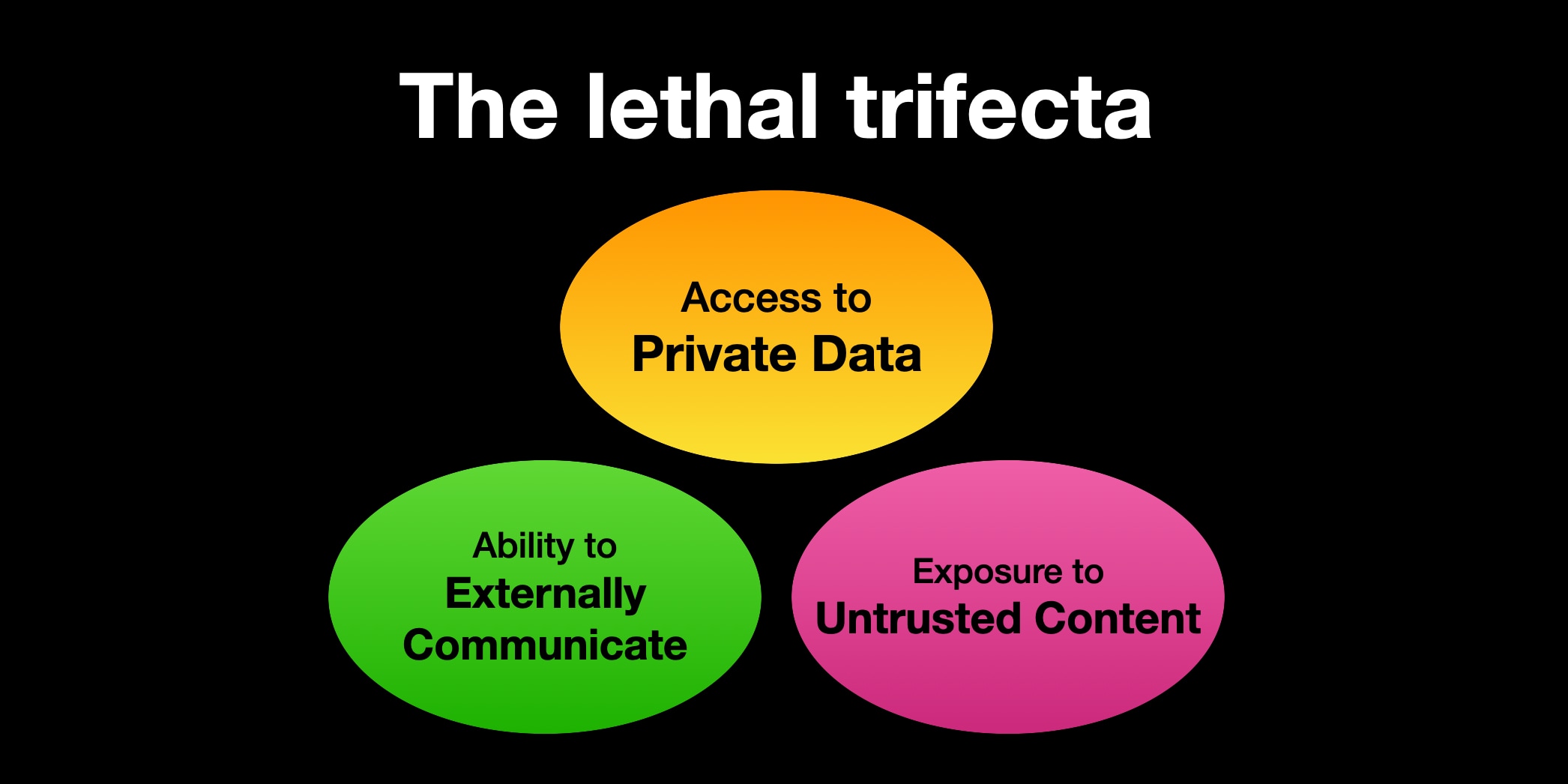

Here's yet another example of a lethal trifecta attack, where an LLM system combines access to private data, exposure to potentially malicious instructions and a mechanism to communicate data back … | Simon Willison’s Weblog