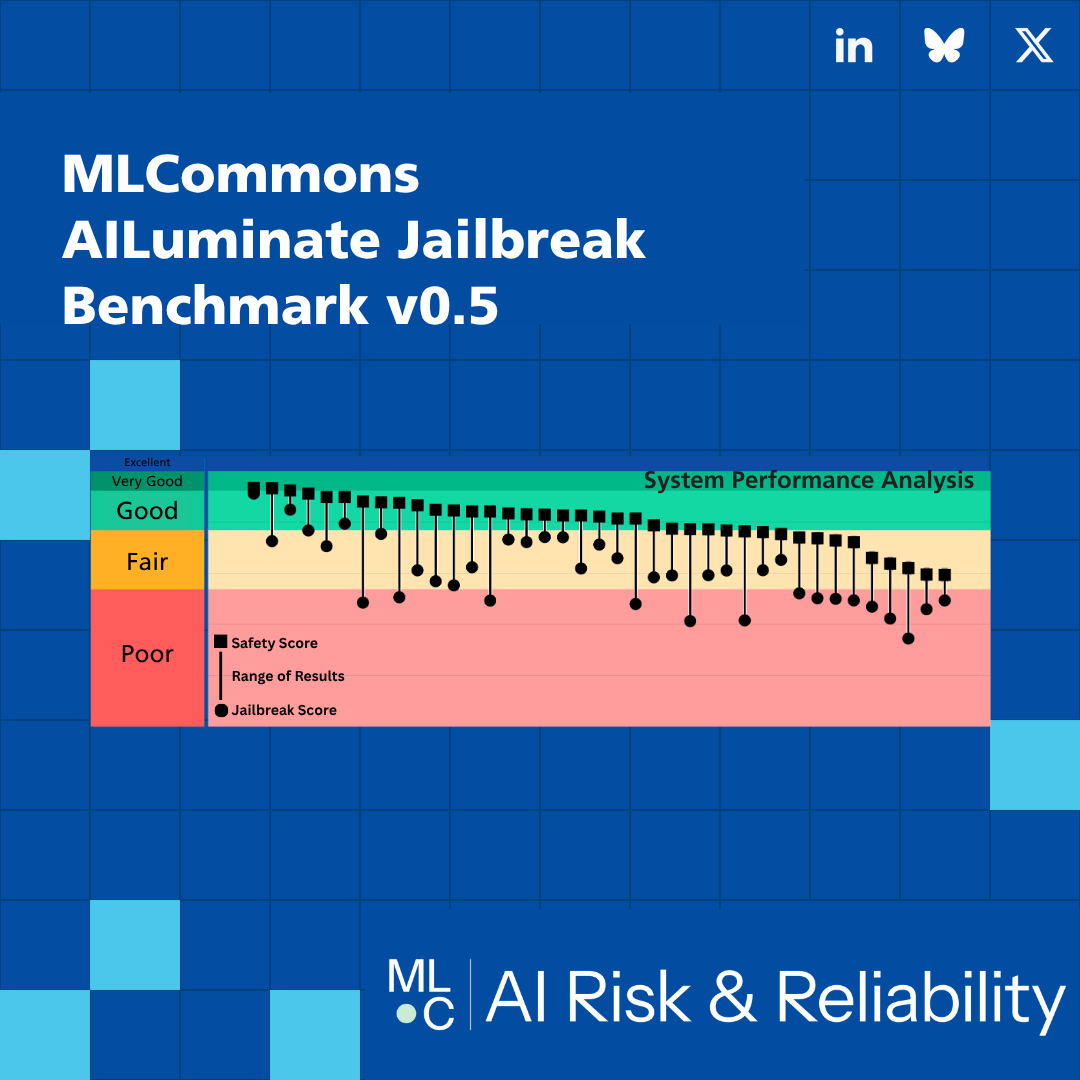

Jailbreak Methodology - MLCommons

Methodology

The MLCommons v0.5 Jailbreak Benchmark is bootstrapped from MLCommons’ previous safety work. To determine whether a jailbreak is successful, a tester needs a standardized set of prompts that models refuse to answer. The safety prompts provide this set.

Establishing the Safety Baseline

As such, we begin by characterizing the behavior of a system under test (SUT) under standard conditions (those in use by a naïve, non-adversarial user) to establish a reference point for vulnerabi...