Ptolemaic Thinking in the Age of AI

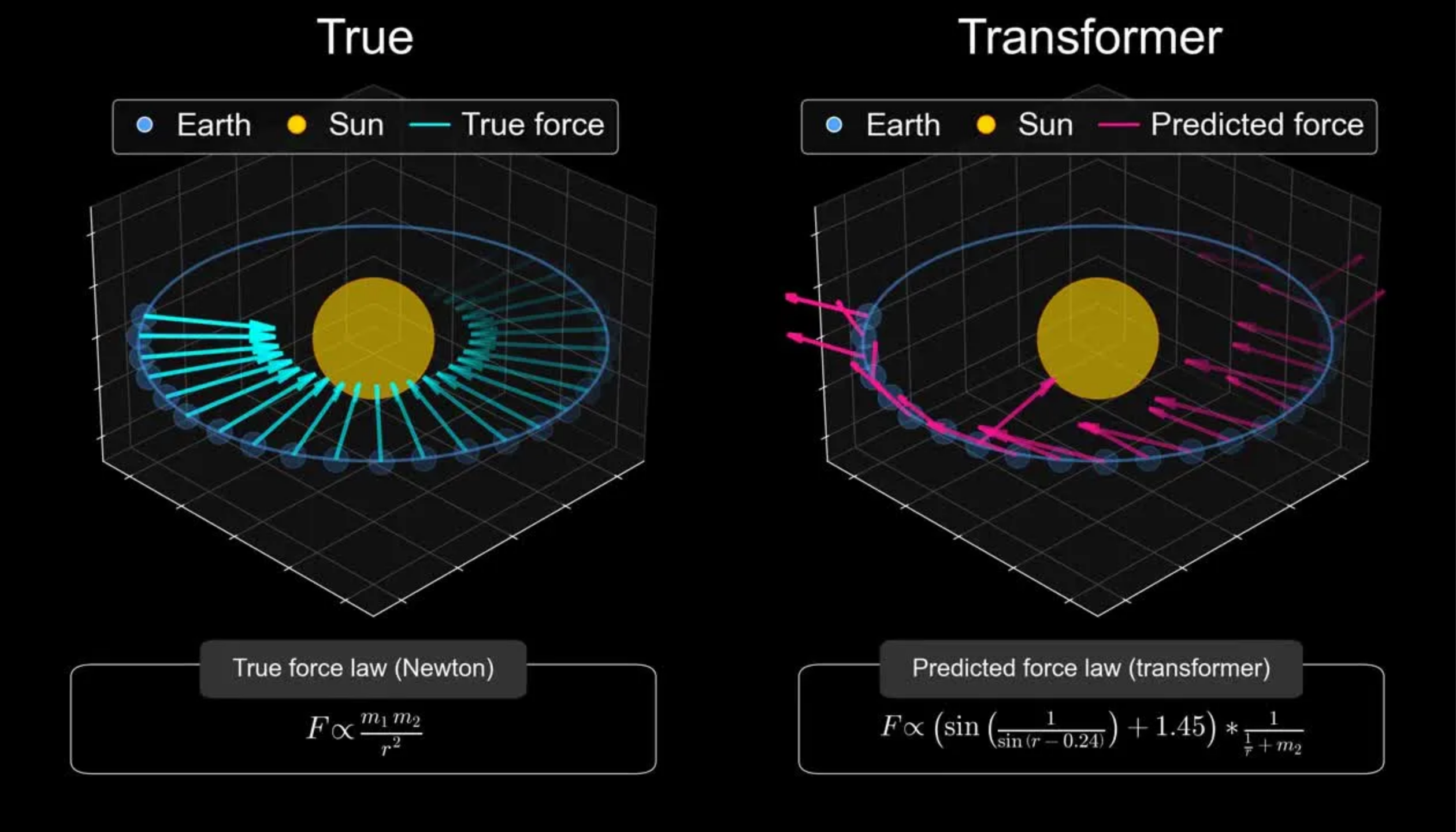

Of all the AI buzzwords out there, “model” would seem free of hyperbole compared to “superintelligent” or the “singularity.” Yet this innocuous-seeming word can mean two contradictory things, and AI companies are deliberately blurring the line between them. A recent Harvard/MIT study on simulating planetary orbits illustrates the contrast between what scientists consider a “world model” and what AI enthusiasts think transformers are generating.